July 02, 2025

MediaWiki Maintenance Tips

As highlighted in our MediaWiki Upgrade Policy post, regular updates to both the MediaWiki core and its extensions are crucial to maintain the stability, performance, and security of your wiki. However, is there a need for maintenance beyond software upgrades in MediaWiki?

Out of the box, MediaWiki provides around 200 scripts designed for various maintenance tasks. Additionally, many other maintenance scripts can be delivered by extensions. These scripts can be scheduled or executed manually to address errors, resolve discrepancies, update or back up data, optimize performance, clean up spam, and more.

Unlike many other Content Management Systems (CMS), MediaWiki's approach to maintenance encourages the use of the command line. Currently, web-based interfaces for running maintenance scripts are either abandoned or not mature enough for use in a production environment.

The standard location where MediaWiki stores its maintenance scripts is the “maintenance” directory in the MediaWiki root. Given that MediaWiki is written in PHP, scripts are meant to be run using the following format (as of MediaWiki 1.39):

php maintenance/nameOfTheScript.php

Note: With great power comes great responsibility. Exercise caution when running any script, especially in a production environment. Always refer to the script documentation. Typically, running a script with the "--help" flag provides available options and information, facilitating a better understanding of the script's purpose, functionality, and parameters.

php maintenance/nameOfTheScript.php --help

Extensions usually store their maintenance scripts in the “maintenance” directory in the extension root:

php extensions/NameOfExtension/maintenance/nameOfTheScript.php

Now that we have a foundational understanding of where the scripts are located and how to launch them, let's delve a bit deeper.

Job Queue

MediaWiki background jobs are tasks executed asynchronously to perform operations that might take significant time. Instead of being processed immediately upon user request, these tasks are queued and processed later to avoid impacting the user experience. By default, jobs in the queue are run as a function of user interaction with the wiki. On wikis that hold large amounts of data, receive little traffic, or are under development, an exceptionally large number of jobs may build up in the queue.

# To get the number of jobs in the queue

php maintenance/showJobs.php

# To get extended statistics about the state of the jobs, grouped by type

php maintenance/showJobs.php --group

If you see a lot of jobs in the queue, you can process them by running a script.

# To process the jobs in the queue manually

php maintenance/runJobs.php

It supports the following parameters:

--maxjobs: Maximum number of jobs to run

--maxtime: Maximum amount of wall-clock time

--nothrottle: Ignore job throttling configuration

--procs: Number of processes to use

--result: Set to "json" to print only a JSON response

--type: Type of job to run

--wait: Wait for new jobs instead of exiting

An excessive number of jobs in the queue can result in delays in content updates and other inconsistencies. Therefore, it is advisable to set up a cronjob, shell script, or a system service to process the queue in batches in the background. For more information, please refer to the documentation.
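As a rough illustration of such a shell script, the sketch below runs one bounded batch using the `--maxjobs` and `--maxtime` parameters listed above. The variable names (`MW_ROOT`, `MAX_JOBS`, `MAX_TIME`, `DRY_RUN`), the install path, and the default values are assumptions made for this example, not MediaWiki settings. The script only prints the command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: run one bounded batch of MediaWiki jobs; meant to be called from cron.
# MW_ROOT, MAX_JOBS, MAX_TIME and DRY_RUN are hypothetical names for this example.
MW_ROOT="${MW_ROOT:-/var/www/mediawiki}"  # assumed install path, adjust to yours
MAX_JOBS="${MAX_JOBS:-500}"               # value passed to --maxjobs
MAX_TIME="${MAX_TIME:-50}"                # value passed to --maxtime (seconds)
DRY_RUN="${DRY_RUN:-1}"                   # 1 = only print the command

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

run php "$MW_ROOT/maintenance/runJobs.php" --maxjobs "$MAX_JOBS" --maxtime "$MAX_TIME"
```

Saved under a name of your choice, the script could be called every minute from a crontab entry such as `* * * * * DRY_RUN=0 /path/to/run-wiki-jobs.sh`. Keeping `MAX_TIME` below the cron interval helps avoid overlapping runs.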

The manageJobs.php script serves as a maintenance tool for manually deleting jobs from the job queue or putting abandoned jobs back into circulation. It's important to note that while the script can return abandoned jobs to circulation, it won't process them. To actually execute them, you need to run runJobs.php.

Database Maintenance

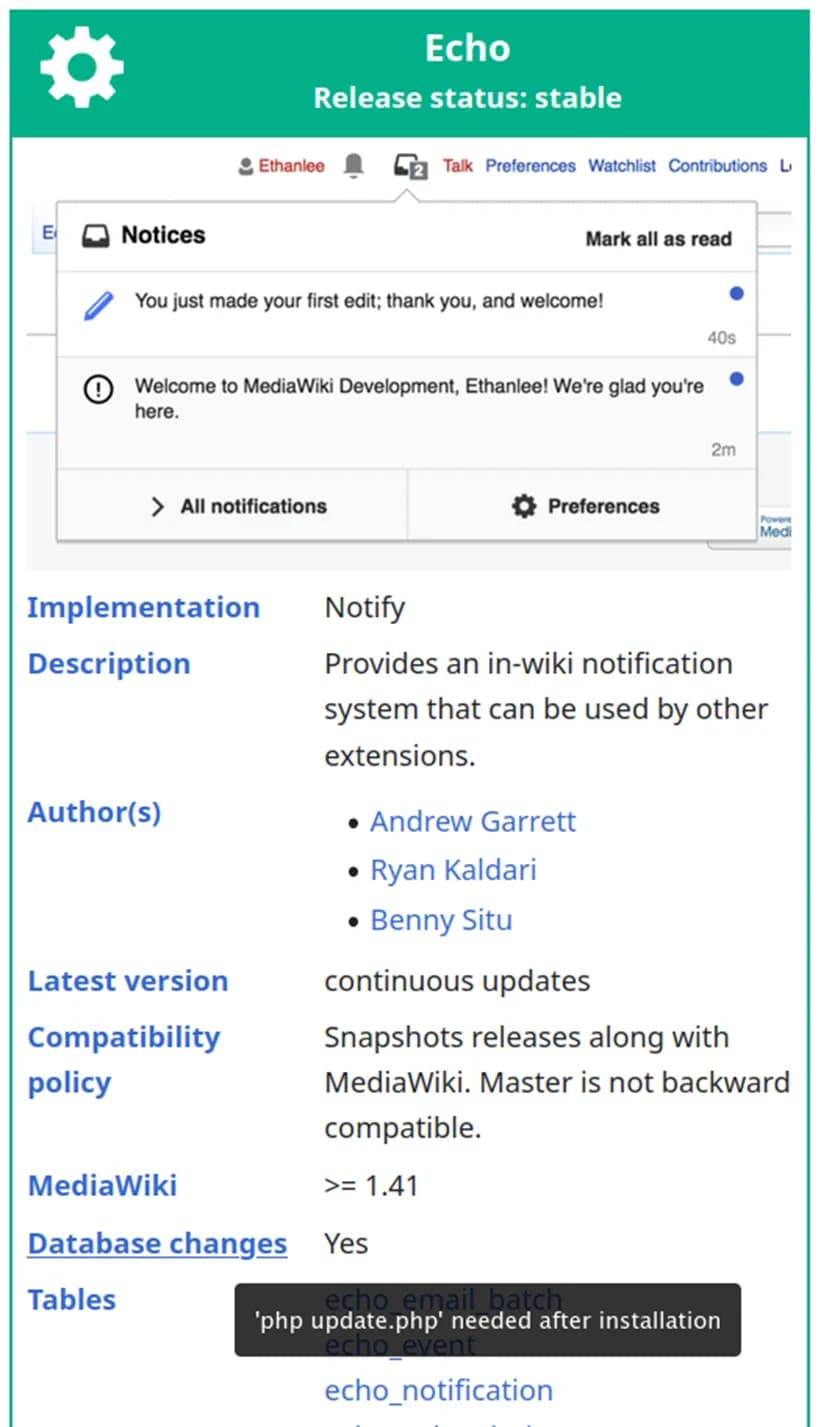

During major upgrades or upon the installation of certain extensions, MediaWiki often requires database schema upgrades, including the addition or redesign of tables, fields, data types, or keys. In such cases, running an update script is mandatory:

php maintenance/update.php

When installing extensions, pay attention to the "Database changes" section of the extension page infobox. If it indicates "Yes," running the update script is necessary.

Data Backups

MediaWiki data backups are crucial to protect against data loss from accidental deletion, hardware failure, or cybersecurity threats. Key areas requiring backup include:

- the database,

- uploaded files, and

- configuration/customizations

An effective backup policy, coupled with suitable tools, ensures business continuity during unforeseen disruptions. As a best practice, backups should run regularly and automatically, with a frequency tailored to the wiki's use case and the criticality of data safety. Storing backup data externally is vital to mitigate the risk of data loss in the event of local disasters, such as fires or hardware failures.

For the wikis maintained by WikiTeq, we employ the Restic dockerized service along with S3-compatible cloud storage to configure, execute, store, and rotate backups.

Consider the following examples for guidance and inspiration:

# To back up the database

mysqldump -u {wikiuser} -p {wikidb} > ~/{wikidb}.$(date +"%m.%d.%Y").sql

# To back up uploaded files

tar czvf ~/images.$(date +"%m.%d.%Y").tar.gz {w}/images

# To view a list of snapshots created by restic in the S3 storage

restic -r 's3:{endpoint}/{bucket}' snapshots

Remember to customize the commands for your specific environment by replacing the placeholders in curly brackets with the appropriate values.
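The database and file commands above can be combined into one script suitable for scheduling. The following is a minimal sketch under stated assumptions: the variable names, paths, and the `backup_name` and `run` helpers are made up for this example, and the database password is assumed to be stored in the `[client]` section of `~/.my.cnf` so that no prompt blocks an unattended run. It uses a year-first date stamp so that file names sort chronologically, and it only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: dated database and upload backups, dry-run by default.
# All variable names and default paths below are assumptions for this example.
WIKI_DB="${WIKI_DB:-wikidb}"
WIKI_USER="${WIKI_USER:-wikiuser}"
WIKI_ROOT="${WIKI_ROOT:-/var/www/mediawiki}"
BACKUP_DIR="${BACKUP_DIR:-$HOME/backups}"
DRY_RUN="${DRY_RUN:-1}"

# Build a file name such as wikidb.2025-07-02.sql
# $1 = base name, $2 = date stamp, $3 = extension
backup_name() {
    printf '%s.%s.%s\n' "$1" "$2" "$3"
}

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

stamp=$(date +%Y-%m-%d)
run mkdir -p "$BACKUP_DIR"
# --result-file writes the dump to a file instead of standard output.
run mysqldump -u "$WIKI_USER" \
    --result-file="$BACKUP_DIR/$(backup_name "$WIKI_DB" "$stamp" sql)" "$WIKI_DB"
# -C changes into the wiki root so the archive contains a relative images/ path.
run tar czf "$BACKUP_DIR/$(backup_name images "$stamp" tar.gz)" -C "$WIKI_ROOT" images
```

A script like this covers only the local copy; shipping the resulting files to external storage remains a separate step.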

Data Exchange

MediaWiki includes a maintenance script, dumpBackup.php, that dumps wiki pages into an XML interchange format for export or backup.

# Back up the current revisions of all templates to a file

php maintenance/dumpBackup.php --current --filter namespace:10 --output file:dumpfile.xml

The exported pages can later be imported into the same or another MediaWiki instance.

php maintenance/importDump.php < dumpfile.xml
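After an import, recent changes and site statistics usually need to be refreshed. The sketch below chains the import with two scripts that are described later in this post. The `import_dump` function name and its arguments are assumptions for this example; the script does nothing unless it is given both a MediaWiki root and a dump file.

```shell
#!/bin/sh
# Sketch: import an XML dump, then refresh recent changes and site statistics.
# import_dump is a hypothetical helper name used only in this example.
# $1 = MediaWiki root directory, $2 = XML dump file
import_dump() {
    # Each step runs only if the previous one succeeded.
    php "$1/maintenance/importDump.php" < "$2" &&
    php "$1/maintenance/rebuildrecentchanges.php" &&
    php "$1/maintenance/initSiteStats.php" --update
}

# Run only when both arguments are supplied, e.g.:
#   sh import-dump.sh /var/www/mediawiki dumpfile.xml
if [ "$#" -eq 2 ]; then
    import_dump "$1" "$2"
fi
```

Chaining the steps with `&&` stops the sequence at the first failure, so a broken import is not followed by rebuilds on incomplete data.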

Please note that dumpBackup.php does not create a full database dump! For a full backup, refer to the documentation.

Another script can import files and / or images into the wiki in a bulk manner. It will put them in the upload directory and create wiki pages, as if they were uploaded manually via Special:Upload.

# Import all PNG and JPG images from “My Pictures” and its subfolders if they are not uploaded yet

php maintenance/importImages.php --skip-dupes --extensions png,jpg --search-recursively "My Pictures"

Other types of data exchange functionality are supplied by extensions.

Data Update

There are several scripts designed for data rebuilding and cleanup purposes:

rebuildrecentchanges.php - rebuilds the recent changes and related tables.

php maintenance/rebuildrecentchanges.php

rebuildall.php - rebuilds the links tables, the text search index, and the recent changes table in one go. It is useful for restoring data consistency after certain configuration changes or extension updates.

php maintenance/rebuildall.php

rebuildtextindex.php - rebuilds the search index table from scratch.

php maintenance/rebuildtextindex.php

refreshLinks.php - updates links tables after changes to the wiki's content.

php maintenance/refreshLinks.php

initSiteStats.php - initializes or updates site statistics, including page count and user count.

php maintenance/initSiteStats.php --active --update

deleteOrphanedRevisions.php - reports or deletes revisions that refer to a nonexistent page.

php maintenance/deleteOrphanedRevisions.php --report

deleteArchivedRevisions.php - permanently deletes all archived revisions, that is, the revisions of deleted pages that are kept in the archive table.

php maintenance/deleteArchivedRevisions.php --delete

cleanupTitles.php - cleans up pages with invalid titles, for example titles that became unparseable after a configuration change.

php maintenance/cleanupTitles.php --dry-run

Remove the `--dry-run` switch to actually apply the changes.

Combating Spam

cleanupSpam.php - removes all links pointing to a given hostname. If a page contains a link to that host, the script walks back through the page history until it finds a revision without the link, then reverts the page to that revision.

php maintenance/cleanupSpam.php example.com --all --delete

blockUsers.php - blocks or unblocks a batch of users. It can read the list from STDIN or from a file argument. This is probably most useful when dealing with spammers, using a list generated with an SQL query or similar. By default, users are hard-blocked with no expiry: they are autoblocked on any current and subsequent IP addresses, cannot send email, cannot edit their own talk page, and cannot create further accounts. You can change these settings through options.

php maintenance/blockUsers.php userlist.txt --performer "Spam Warrior" --reason "Vandalism"

purgeExpiredBlocks.php - removes expired IP blocks from the database.

php maintenance/purgeExpiredBlocks.php

Sitemap

Sitemaps are files that make it more efficient for search engine robots to crawl a website.

generateSitemap.php - generates a sitemap for a MediaWiki installation.

By default, the script generates a sitemap index file and one gzip-compressed sitemap for each namespace that has content.

# to see options that can be passed to the script.

php maintenance/generateSitemap.php --help

You may need to set up a cron job to update the sitemap automatically.
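As one possible starting point, the sketch below prints a crontab entry that regenerates the sitemap every night at 03:00. The `sitemap_cron_line` helper, the install path, and the server URL are assumptions for this example. The `--fspath`, `--urlpath`, and `--server` options should be checked against the `--help` output of your MediaWiki version before use.

```shell
#!/bin/sh
# Sketch: print a crontab entry that regenerates the sitemap nightly at 03:00.
# sitemap_cron_line is a hypothetical helper name used only in this example.
# $1 = MediaWiki root directory, $2 = public server URL
sitemap_cron_line() {
    printf '0 3 * * * php %s/maintenance/generateSitemap.php --fspath %s/sitemap --urlpath /sitemap/ --server %s\n' \
        "$1" "$1" "$2"
}

# Assumed example values; replace them with your own path and URL.
sitemap_cron_line /var/www/mediawiki https://example.com
```

The printed line can be reviewed and then pasted into the crontab of the user that owns the wiki files.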

Do not forget to make the sitemap discoverable by crawlers by adding a link to the sitemap index in your site root directory, e.g.:

ln -s /sitemap/sitemap-index-example.com.org.xml sitemap.xml

MediaWiki Maintenance Best Practices

When devising the most appropriate maintenance plan for your MediaWiki, please ensure adherence to the following guidelines:

- Schedule regular database backups to prevent data loss. The frequency of backups will depend on the activity and criticality of your wiki.

- Regularly review user accounts and user contributions. Remove inactive or spam accounts as needed. Monitor and review the content on your wiki, addressing spam, inaccurate information, or inappropriate content.

- Configure and optimize caching mechanisms to improve page load times. MediaWiki supports various caching systems, including opcode caching and object caching.

- Use tools like Matomo or other analytics solutions to monitor user behavior and traffic patterns. This information can be valuable for making informed decisions about content and performance improvements.

- Monitor error logs for any issues and address them promptly. Regularly check the error logs for warnings or errors that may indicate underlying problems.

- Conduct regular audits of your MediaWiki configuration, ensuring that settings align with best practices and security recommendations. Monitor the MediaWiki security mailing list and apply security patches promptly.

- Before applying updates or major changes to the production environment, test them in a staging or testing environment to identify and address potential issues.

Check out our other blog posts to start your journey toward becoming a MediaWiki professional, and don't hesitate to schedule a free, no-obligation call with us!